DevTernity in beautiful Riga, Latvia was a great conference and I had good fun delivering my session called "Advanced Mixed Reality Development and Best Practices".

The presentation covered best practices we learned while developing for the HoloLens since 2015 and my Top 10 HoloLens Developer Recommendations 2018. I also talked about and demoed exciting new things with MR + AI, showcasing near real-time object recognition running on the HoloLens and leveraging WinML for on-device Deep Learning inference. You can see a video of the demo embedded at the bottom of this post. I also showed how the new RS5 Windows 10 update brings hardware-accelerated inference to the HoloLens via DirectX 12 drivers.

The slide deck can be downloaded here. It's quite large with embedded videos, so I recommend downloading it.

The WinML object recognition demo source code targeting RS5 APIs is here.

The session was recorded. You can watch it here or embedded below:

Also, here's a video recording of my WinML object recognition demo targeting industrial scenarios:

Monday, December 3, 2018

Monday, November 19, 2018

The Sky is NOT the limit - Content for the European Space Agency ESA Keynote and Talks

The European Space Agency ESA invited me a while ago to give a keynote for the Visualisation and Science Communication track at the first ever Φ-week conference. I was also the chair for the track and made sure speakers were on time, managed questions, etc. Plus I gave another session at the VR/AR workshop and helped with the closing panel discussion, which proposed advice on how the ESA could leverage VR/AR in an earth observation context.

I felt really honored that the ESA invited me to keynote their first event and I had a great time at the ESA ESRIN facility in beautiful Frascati, which is near Rome, Italy.

The ESRIN is planning another Φ-week conference for 2019, so keep an eye out in case you want to go there as well.

My keynote "Beam me up, Scotty! Teleporting people and objects via 3D holographic livestreaming." showed how Immersive Telepresence and holographic 3D communication will play an important role for space travel and colonization. Of course, it included our HoloBeam technology and how it is making science fiction a reality, enabling those use cases. I also showed some AI Deep Learning research we are running for various related scenarios and gave an outlook for future holographic projection research.

My other talk was titled "Look, holograms! A short introduction to Mixed Reality and HoloLens." and was exactly that. I also performed live demos of some of our HoloLens apps.

The ESA ESRIN also had two sketch artists on-site who created a funny little summary of the Visualisation and Science Communication track that I chaired and gave the keynote for.

The slide decks for my keynote and the other talk can be downloaded here. Both are quite large with embedded videos, so I recommend downloading them.

The keynote was live streamed and recorded. You can watch it here or embedded below (the stream starts 5 minutes into the video):

Labels: 3D, AI, Augmented Reality, Deep Learning, Deep Neural Networks, DNN, ESA, holobeam, hololens, mixed reality, ML, MR, Science, Space, speaker, speaking, talk

Friday, September 28, 2018

Content for the Digility Session - Advanced Mixed Reality Development and Best Practices

I had a good time at the Digility conference in Cologne delivering my session "Advanced Mixed Reality Development and Best Practices".

The presentation covered best practices we learned while developing for the HoloLens since 2015 and my Top 10 HoloLens Developer Recommendations 2018. I also talked about and demoed exciting new things with MR + AI, showcasing near real-time object recognition running on the HoloLens and leveraging WinML for on-device Deep Learning inference. You can see a video of the demo embedded at the bottom of this post. I also showed how the new RS5 Windows 10 update brings hardware-accelerated inference to the HoloLens via DirectX 12 drivers.

The slide deck can be downloaded here. It's quite large with embedded videos, so I recommend downloading it.

The WinML object recognition demo source updated to RS5 APIs is here.

The session was recorded and the video is available here and embedded below:

Also, here's a video recording of my WinML object recognition demo targeting industrial scenarios including an Easter egg:

Labels: 3D, AI, Augmented Reality, Deep Learning, Deep Neural Networks, DNN, holobeam, hololens, mixed reality, ML, MR, speaker, speaking, talk

Friday, June 22, 2018

Content for the Unite Berlin AutoTech Summit Session - Industrial Mixed Reality

The Unite Berlin AutoTech Summit was amazing and I had a great time delivering my session at Unity's largest conference in Europe.

The title of my talk was "Industrial Mixed Reality – Lessons learned developing for Microsoft HoloLens".

The presentation covered best practices we learned while developing for the HoloLens since 2015 and my Top 10 HoloLens Developer Recommendations 2018. I also talked about and demoed exciting new things to come with MR + AI, showcasing near real-time object recognition running on the HoloLens and leveraging WinML for on-device Deep Learning inference. You can see a video of the demo embedded at the bottom of this post. This is a brand new demo video better suited to an industrial and automotive context, recognizing car parts and tools.

The slide deck can be downloaded here. It's quite large with embedded videos, so I recommend downloading it.

The WinML object recognition demo source code is here.

The session was recorded and the video is up on Unity's YouTube channel and embedded below.

Also, here's a video recording of my new WinML object recognition demo targeting industrial scenarios including an Easter egg:

Labels: 3D, Augmented Reality, AutoTech, Deep Learning, Deep Neural Networks, DNN, holobeam, hololens, mixed reality, MR, speaker, speaking, talk, unite, unity, Unity3D

Wednesday, May 9, 2018

Content for the Microsoft Build 2018 Session: Seizing the Mixed Reality Revolution – A past, present and future Mixed Reality Partner perspective

Build 2018 is a wrap! I had a blast delivering my breakout session at Microsoft's largest developer conference in Seattle.

The title of my talk was "Seizing the Mixed Reality Revolution – A past, present and future Mixed Reality Partner perspective".

I was told I was the only external, non-Microsoft speaker with a full 45-minute breakout session. What an honor.

The presentation covered best practices we learned while developing for the HoloLens since 2015 and my Top 10 HoloLens Developer Recommendations 2018. I also talked about and demoed exciting new things to come with MR + AI, showcasing near real-time object recognition running on the HoloLens and leveraging WinML for on-device Deep Learning inference. You can see a video of the demo embedded at the bottom of this post.

A funny anecdote: an attendee was wearing the HoloLens during my talk. I saw the white LED was turned on, so I assumed he was recording my session with the HoloLens. I asked him afterwards what he was doing and he was in fact live streaming my session to his colleague on the east coast using the new Remote Assist app.

The slide deck can be downloaded here. It's quite large with embedded videos, so I recommend downloading it.

The WinML object recognition demo source code is here.

The session was recorded and the video is up on YouTube and embedded right below:

After the session I was interviewed by Lucas and we talked about why fast Deep Learning inference with Windows Machine Learning is a key technology. Watch it here:

Finally, here's a video recording of my WinML object recognition demo:

Labels: 3D, Augmented Reality, build, Deep Learning, Deep Neural Networks, DNN, holobeam, hololens, mixed reality, MR, msbuild, speaker, speaking, talk

Tuesday, April 10, 2018

Microsoft Regional Director

Today, I got an exciting email from Microsoft inviting me into the Microsoft Regional Director program. Needless to say I accepted the invitation. It's a huge honor to join this small group of outstanding experts.

I would like to thank Microsoft, the community and especially everyone who nominated me for RD.

It was almost exactly 8 years ago when I got the first MVP award and my blog post started with the same words (I only fixed the bug with exiting/exciting :-). Back then I got the Silverlight MVP Award and I moved over to the Windows Phone Development MVPs shortly after, since I was doing so much Windows Phone dev. The Win Phone Dev MVPs joined forces with the Client App Dev and Emerging Experiences MVPs a while ago and formed the Windows Development MVP category.

Now, I'm honored to be a Microsoft Windows Development MVP and also a Regional Director.

If you don't know what a Regional Director is, the RD website describes the role pretty well:

The Regional Director Program provides Microsoft leaders with the customer insights and real-world voices it needs to continue empowering developers and IT professionals with the world's most innovative and impactful tools, services, and solutions.

Established in 1993, the program consists of 150 of the world's top technology visionaries chosen specifically for their proven cross-platform expertise, community leadership, and commitment to business results. You will typically find Regional Directors keynoting at top industry events, leading community groups and local initiatives, running technology-focused companies, or consulting on and implementing the latest breakthrough within a multinational corporation.

There's also a nice FAQ with more information.

Labels: AI, Blogging, Microsoft, mixed reality, MVP, Open Source, RD

Thursday, March 22, 2018

AI researchers, wake up!

Picture from Jeff Bezos' Tweet "Taking my new dog for a walk"

I would say I'm fairly knowledgeable in the AI field, staying up-to-date with the research and currently exploring WinML in Mixed Reality. I also developed my own Deep Learning engine aka Deep Neural Network (DNN) from scratch 15 years ago and applied it to OCR. It was just a simple Multi-Layer Perceptron, but hey, we used a genetic training algorithm, which also seems to be having a renaissance in the Deep Learning field as Deep Neuroevolution.

If you are familiar with Deep Learning, skip this paragraph, but if not: a DNN, simply put, simulates the human brain with neurons connected by synapses. Artificial neural networks are basically lots of matrix computations where the trained data is stored as synapse weight vectors and tuned via lots of parameters like neuron activation functions, network structure, etc.
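
To make the "lots of matrix computations" part a bit more concrete, here is a minimal sketch of a forward pass through a tiny Multi-Layer Perceptron in plain Python. The layer sizes, the sigmoid activation and the hand-picked weights are assumptions chosen purely for illustration; this is not the engine mentioned above.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(weights, biases, inputs):
    """One layer: a matrix-vector product plus bias, then the activation.

    weights[j][i] is the synapse weight from input i to neuron j.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def mlp_forward(layers, inputs):
    """Run the input through every (weights, biases) layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(weights, biases, activations)
    return activations


# Hypothetical, hand-picked weights: 2 inputs -> 2 hidden neurons -> 1 output.
example_layers = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
output = mlp_forward(example_layers, [1.0, 0.0])
print(output)
```

Everything the network "knows" lives in the weight and bias numbers; changing the network structure just means changing the shapes of those lists.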

Those networks are trained in different ways, but the most common these days is supervised learning, where lots of big training data sets are run through the DNN, the desired output is compared with the actual output and the error is then backpropagated until the actual output is sufficiently accurate. Once the DNN is trained, it enters the inference phase where it is provided with unknown data sets, and if it's trained correctly it will generalize and provide the right output for unknown inputs.
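
The supervised training loop described above can be sketched roughly like this. It is a toy example under stated assumptions: a 2-2-1 sigmoid network, a squared-error loss, plain gradient descent and the logical OR function as the "training set". The names `net`, `train_step` and the learning rate are made up for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(net, x):
    """Return (hidden activations, output) for one input vector."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(net["w_hidden"], net["b_hidden"])]
    out = sigmoid(sum(w * h for w, h in zip(net["w_out"], hidden)) + net["b_out"])
    return hidden, out


def mean_squared_error(net, data):
    return sum((forward(net, x)[1] - t) ** 2 for x, t in data) / len(data)


def train_step(net, x, target, lr):
    """One backpropagation update for a single (input, desired output) pair."""
    hidden, out = forward(net, x)
    # Error signal at the output: derivative of 0.5 * (out - target)^2
    # multiplied by the derivative of the sigmoid.
    delta_out = (out - target) * out * (1.0 - out)
    # Propagate the error back through the (old) output weights.
    delta_hidden = [delta_out * w * h * (1.0 - h)
                    for w, h in zip(net["w_out"], hidden)]
    # Gradient-descent updates of all weights and biases.
    net["w_out"] = [w - lr * delta_out * h for w, h in zip(net["w_out"], hidden)]
    net["b_out"] -= lr * delta_out
    for j, d in enumerate(delta_hidden):
        net["w_hidden"][j] = [w - lr * d * xi
                              for w, xi in zip(net["w_hidden"][j], x)]
        net["b_hidden"][j] -= lr * d


rng = random.Random(0)  # fixed seed so runs are repeatable
net = {
    "w_hidden": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],
    "b_hidden": [0.0, 0.0],
    "w_out": [rng.uniform(-1, 1) for _ in range(2)],
    "b_out": 0.0,
}
# Toy training set: inputs paired with the desired output (logical OR).
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

initial_loss = mean_squared_error(net, data)
for _ in range(2000):
    for x, target in data:
        train_step(net, x, target, lr=0.5)
final_loss = mean_squared_error(net, data)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

After training, calling `forward(net, x)` on its own is the inference phase: no more weight updates, just the forward computation.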

The basic techniques are quite old and were gathering dust, but for a few years now there has been a renaissance as lots of training data became available, along with huge computing power in the form of powerful GPUs and new specialized accelerators, plus AI researchers discovered GitHub and open source.

AI researchers, the question has to be: Should we do it, not can we do it.

We live in the era of Neural Network AI and it has only just begun. Current AI systems are very specific and targeted at certain tasks, but Artificial General Intelligence (AGI) is becoming more and more interesting with advances in Reinforcement Learning (RL) like Google's DeepMind AlphaGo challenge.

RL is just in its infancy but already quite scary if you look at some research from game development innovators like SEED literally using military training camp simulations to train self-learning agents.

They also applied it to Battlefield 1. There are a couple of things to improve, but nevertheless impressive results were achieved which go beyond AlphaGo.

Does everyone see an army of AI in this video or is it just me?

I'm sure the developers involved don't have bad goals in mind and I can see it being nicely suited for computer game AI. But this is not just the age of Deep Learning; real-time ray tracing is moving forward too, so why not render photorealistic scenes and use them as input for RL agent training, with the agent then deployed to a real-world war machine? With the photorealistic quality of ray tracing, endless real-world-like scenes can be synthesized, solving the problem of getting enough training data.

Below is another video showing real-time ray tracing and self-learning agents. The video looks cute and all fun, but think about what happens if you replace the assets with a different scene.

Don't get me wrong, it's super impressive and I'm all in for advancements in tech, but we have to think about the implications in a broader context.

Oh wait, there's actually already a photorealistic simulation framework available to train autonomous drones and other vehicles. They just need to add real-time ray tracing now, but I guess it's already in the works. I was getting a little worried, but good that drones are only used in civilian scenarios.

But hey isn't it cute how Jeff Bezos is just taking his robot dog out for a walk?

We don't even have to look at the future of RL and AGI and scary robots with the fear of the singularity. We already have quite amazing achievements, especially with LSTM and CNN type neural networks. Some of them outperform humans already. LSTMs are used for time-based information like speech recognition and synthesis, and CNNs for computer vision tasks.

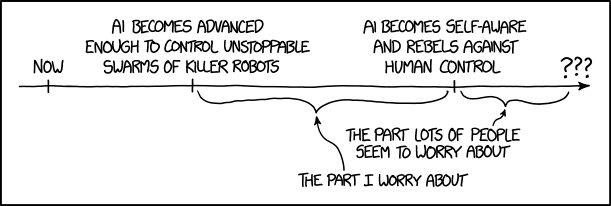

The author of xkcd shares the same concerns

The interesting and scary part is that artificial neural networks are almost a black box after being trained and can draw inferences the developer is not aware of. There's always that uncertainty with DNNs, at least for now, and it can have huge implications for racial, gender and religious profiling even if that was not the intent of the developer/researcher.

AI researchers, wake up.

Ask yourself constantly: What are the implications? Should we do it?

Let's look at some more amazing examples and their implications

I could post tons of recent examples which are super cool if you wear the geek hat but which are super scary if you put the ethics hat on and think a bit about their implications.

Autonomous vehicles are the future and I'm sure lots of us can't wait until we can relax during a long drive in our self-driving car, but the technology might not be ready yet for tests out in the wild, as Uber's deadly accident shows. No doubt human drivers are worse even today, but the perception is that AI is much better, and indeed it should be. My guess is that there's likely not enough training data available for all the edge cases yet: the object movement detection should have triggered a full stop, but the movement might have been interpreted as a moving light shadow, although the radar sensor should still have caught it. We will see what the investigations find out, but I still think it's too early for this kind of real-world test.

NVIDIA has some amazing research ongoing with unsupervised image-to-image translation. Just watch this demo video below and then think about whether we can be sure that dashcam footage was really recorded during day or night.

Google's WaveNet and even more so Baidu's DeepVoice show impressive results for speech synthesis, using samples of humans and then synthesizing their voice patterns. The amount of sample data needed to fake a person's voice keeps shrinking, so not just public figures with lots of open samples but basically everyone can be imitated using text-to-speech.

It doesn't stop with audio synthesis. Researchers from the University of Washington made great progress with video synthesis. Play the embedded video and think about the implications this tech could have in the wrong hands.

You might have heard about Deep Fakes videos being mainly used to generate fake celebrity porn but even worse things like this were created.

And of course video surveillance has been getting a huge boost for a couple of years due to great progress in face and object tracking Deep Learning AI. BBC tested a system in a Chinese city which is fully covered with AI-enabled CCTV cameras and it did not disappoint.

Draw your own conclusion whether it's a good or a scary thing that everyone is always tracked.

There's light!

It's not all just dark. There are of course just as many good examples that leverage modern AI for a good cause with few dark implications.

Impressive results were achieved with AI lipreading that beats professional human lip readers, which can help many people live a better life.

Huge advances are also being made in the medical field, especially in computer vision tasks that automatically analyze radiology images like breast cancer mammography or improve noisy MRI data.

Also prominent companies like Google's DeepMind are beginning to realize the implications for humanity of their work and have started ethical initiatives.

And then there's Facebook!

It's crazy how small a role ethical questions play for large companies like Facebook, which is hoarding billions of data sets from around the world that can be used for training. We provide them not just with the input data sets but even with the training output through our likes, our clicks and even just our scrolling behavior when we read. Add to that the huge investments in Facebook's AI Research group, which is hiring and growing some of the best AI talent in the industry.

Just look at some of the research areas of FB like DeepText, which is super impressive and aims for "Better understanding people's interest". Now ask yourself: for what? Ads? What kind of ads? What is an ad? Is a behavior-changing FB feed an ad?

And then you have companies like Cambridge Analytica who crawled/acquired the data and abuse it to sell their information warfare mercenary services to anyone, changing human behavior and altering elections.

Real-world war machines might not be needed anymore. Big data + Deep Learning + Behavioral Psychology is a dangerous weapon, if not the most dangerous.

It's good to see Mark Zuckerberg apologizing for the issues and we can only hope it will have real consequences and that this is actually still controllable at all.

And then there's YOU!

It should not just be the Elon Musks of the world warning about the impact of unethical AI. We as developers and researchers at the forefront of technology have a responsibility too and need to speak up. It's about time, so please think about it, share your thoughts and raise your concerns.

The creator of Keras, a popular Deep Learning framework and a real expert, shared his thoughts about Facebook and the implications of its massive AI research investments.

I couldn't agree more, so let me finish this post here with his must-read Twitter thread:

The problem with Facebook is not *just* the loss of your privacy and the fact that it can be used as a totalitarian panopticon. The more worrying issue, in my opinion, is its use of digital information consumption as a psychological control vector. Time for a thread

François Chollet (@fchollet), March 21, 2018

Make sure to read the whole thread here.

There's a little twist to it since François works for Google but I expect he sticks to his own principles.

AI researchers, wake up! Say NO to unethical AI!

Labels:

AI,

Deep Learning,

Deep Neural Networks,

DeepFakes,

DNN,

Ethics

Wednesday, January 10, 2018

Big in Vegas! - HoloBeam at CES

Last year was exciting for our Immersive Experiences team and we reached some nice milestones and coverage with our proprietary 3D telepresence technology HoloBeam.

I wrote a post for the Valorem blog with more details about the new version of HoloBeam we are showing at Microsoft's Experience Center (IoT Showcase) at CES 2018 in Las Vegas.

You can read it here:

HOLOBEAM CONTINUES TO PAVE THE WAY FOR IMMERSIVE TELEPRESENCE

Labels: 3D, ar, CES, conference, holobeam, holograms, hololens, mixed reality, MR
